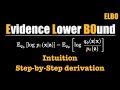

Variational Inference and ELBO Concepts

Interactive Video • Computers • University • Hard

Thomas White

7 questions

1.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

What is the primary purpose of ELBO in variational inference?

To compute exact posterior distributions

To serve as a loss function

To maximize the likelihood of data

To eliminate the need for prior distributions

2.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

Why is the denominator in Bayes' rule problematic in high-dimensional spaces?

It increases computational speed

It simplifies the computation

It results in an intractable integral

It leads to overfitting

3.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

What is the role of the approximating distribution Q in variational inference?

To approximate the posterior

To replace the prior distribution

To eliminate the need for sampling

To exactly match the posterior

4.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

Why is a loss function necessary in optimization procedures?

To increase the complexity of the model

To eliminate the need for data

To compute the dissimilarity between predictions and ground truth

To simplify the model

5.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

What is the main challenge with KL divergence in its current form?

It does not require any parameters

It is always negative

It cannot compute the denominator

It is too simple

6.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

What does the final simplification of ELBO reveal about its components?

It consists of only KL divergence

It includes expected error in reconstruction and KL divergence

It eliminates the need for prior distributions

It only focuses on the likelihood

7.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

Which algorithms are most famous for variational inference?

Random Forest and SVM

Gradient Descent and Backpropagation

K-Means and PCA

Expectation Maximization and Variational Autoencoder
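
Study note: the quantities named in the questions above (the Bayes denominator, the approximating distribution Q, KL divergence, and ELBO) can be checked numerically on a small example. The sketch below is an illustration only and is not taken from the video. It assumes a hypothetical toy model with a three-state latent variable `z` and a single observation, and all probabilities in it are made up. It uses the standard form ELBO = E_q[log p(x | z)] - KL(q(z) || p(z)) and confirms that the gap between the log evidence and the ELBO equals KL(q(z) || p(z | x)).

```python
import math

# Hypothetical toy model: a latent z with three states and one observed x.
# All numbers are invented for illustration.
prior = [0.5, 0.3, 0.2]        # p(z)
likelihood = [0.9, 0.2, 0.1]   # p(x | z) evaluated at the observed x
q = [0.6, 0.3, 0.1]            # approximating distribution q(z), chosen arbitrarily

# Evidence p(x): the denominator of Bayes' rule.
# Here it is a three-term sum; in high dimensions it becomes an integral over z.
evidence = sum(p * l for p, l in zip(prior, likelihood))
posterior = [p * l / evidence for p, l in zip(prior, likelihood)]


def kl(a, b):
    """KL(a || b) for discrete distributions with full support."""
    return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b))


# ELBO = expected log-likelihood under q minus KL from q to the prior.
expected_log_lik = sum(qi * math.log(l) for qi, l in zip(q, likelihood))
elbo = expected_log_lik - kl(q, prior)

# The gap between log evidence and ELBO is the KL from q to the true posterior.
gap = math.log(evidence) - elbo

print("log evidence:", math.log(evidence))
print("ELBO:", elbo)
print("gap:", gap, "KL(q || posterior):", kl(q, posterior))
```

Because the gap is a KL divergence, it is never negative, so the ELBO never exceeds the log evidence. Raising the ELBO with respect to `q` therefore shrinks the distance to the posterior without ever evaluating the posterior directly.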
